- 选择4核8G(master)、8核16G(node1)、8核16G(node2) 三台机器,按量付费进行实验,CentOS7.9

- 安装Docker

- 安装Kubernetes

- 安装KubeSphere前置环境: nfs和监控

- 安装KubeSphere

- master

- node1

- node2

文章目录

- 1.安装Docker

- 2.安装Kubernetes

- 3.安装kubelet、kubeadm、kubectl

- 4.master上安装镜像

- 5.初始化master节点

- 5.1 记录关键信息

- 5.2 安装网络插件

- 6.安装KubeSphere前置环境

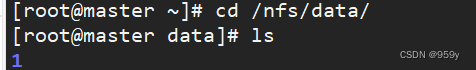

- 6.1 安装nfs文件系统

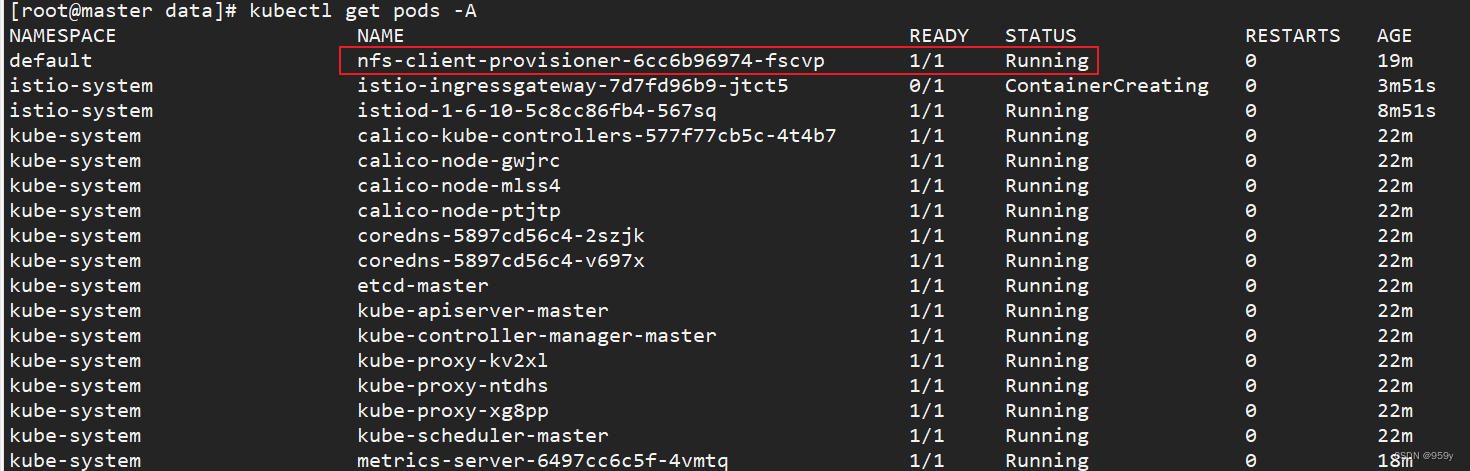

- 6.2 配置默认存储

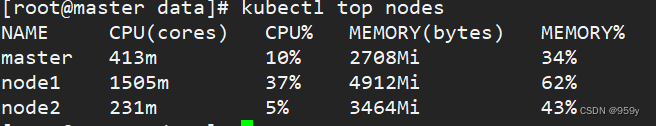

- 6.3 集群指标监控组件

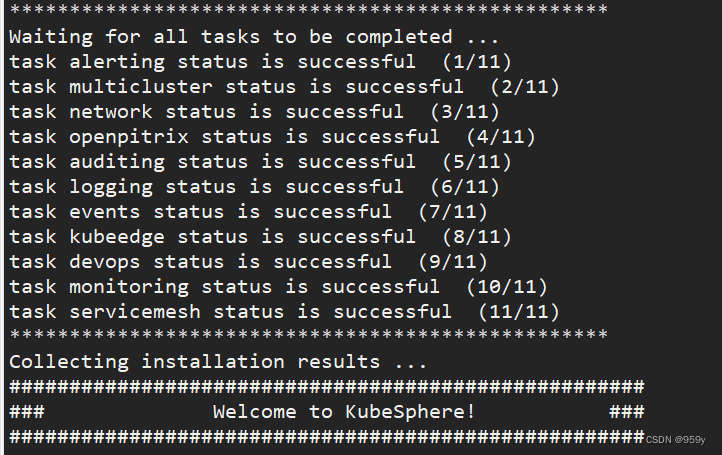

- 7.安装KubeSphere

- 7.1 下载核心配置文件

- 7.2 执行配置文件

- 7.3 配置文件

- 7.3.1 kubesphere-installer.yaml

- 7.3.2 cluster-configuration.yaml

1.安装Docker

yum remove docker*

yum install -y yum-utils

#配置docker的yum地址

yum-config-manager \

--add-repo \

http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

#安装指定版本

yum install -y docker-ce-20.10.7 docker-ce-cli-20.10.7 containerd.io-1.4.6

# 启动&开机启动docker

systemctl enable docker --now

# docker加速配置

mkdir -p /etc/docker

tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://82m9ar63.mirror.aliyuncs.com"],

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

},

"storage-driver": "overlay2"

}

EOF

systemctl daemon-reload

systemctl restart docker

2.安装Kubernetes

#设置每个机器自己的hostname

hostnamectl set-hostname master

hostnamectl set-hostname node1

hostnamectl set-hostname node2

# 将 SELinux 设置为 permissive 模式(相当于将其禁用)

sudo setenforce 0

sudo sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

#关闭swap

swapoff -a

sed -ri 's/.*swap.*/#&/' /etc/fstab

#允许 iptables 检查桥接流量

cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf

br_netfilter

EOF

cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sudo sysctl --system

3.安装kubelet、kubeadm、kubectl

#配置k8s的yum源地址

cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

#安装 kubelet,kubeadm,kubectl

sudo yum install -y kubelet-1.20.9 kubeadm-1.20.9 kubectl-1.20.9

#启动kubelet

sudo systemctl enable --now kubelet

#所有机器配置master域名

echo "172.31.0.4 k8s-master" >> /etc/hosts

4.master上安装镜像

sudo tee ./images.sh <<-'EOF'

#!/bin/bash

images=(

kube-apiserver:v1.20.9

kube-proxy:v1.20.9

kube-controller-manager:v1.20.9

kube-scheduler:v1.20.9

coredns:1.7.0

etcd:3.4.13-0

pause:3.2

)

for imageName in ${images[@]} ; do

docker pull registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/$imageName

done

EOF

chmod +x ./images.sh && ./images.sh

5.初始化master节点

kubeadm init \

--apiserver-advertise-address=172.31.0.4 \

--control-plane-endpoint=k8s-master \

--image-repository registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images \

--kubernetes-version v1.20.9 \

--service-cidr=10.96.0.0/16 \

--pod-network-cidr=192.168.0.0/16

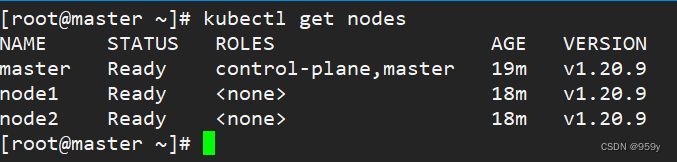

5.1 记录关键信息

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

kubeadm join k8s-master:6443 --token 3vckmv.lvrl05xpyftbs177 \

--discovery-token-ca-cert-hash sha256:1dc274fed24778f5c284229d9fcba44a5df11efba018f9664cf5e8ff77907240 \

--control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join k8s-master:6443 --token 3vckmv.lvrl05xpyftbs177 \

--discovery-token-ca-cert-hash sha256:1dc274fed24778f5c284229d9fcba44a5df11efba018f9664cf5e8ff77907240

5.2 安装网络插件

curl https://docs.projectcalico.org/v3.20/manifests/calico.yaml -O

kubectl apply -f calico.yaml

6.安装KubeSphere前置环境

6.1 安装nfs文件系统

master:

# 在每个机器。

yum install -y nfs-utils

# 在master 执行以下命令

echo "/nfs/data/ *(insecure,rw,sync,no_root_squash)" > /etc/exports

# 执行以下命令,启动 nfs 服务;创建共享目录

mkdir -p /nfs/data

# 在master执行

systemctl enable rpcbind

systemctl enable nfs-server

systemctl start rpcbind

systemctl start nfs-server

# 使配置生效

exportfs -r

#检查配置是否生效

exportfs

node:

yum install -y nfs-utils

showmount -e 172.31.0.4

mkdir -p /nfs/data

mount -t nfs 172.31.0.4:/nfs/data /nfs/data

6.2 配置默认存储

配置动态供应的默认存储类

## 创建了一个存储类

apiVersion: storage.k8s.io/v1

kind: StorageClass

metadata:

name: nfs-storage

annotations:

storageclass.kubernetes.io/is-default-class: "true"

provisioner: k8s-sigs.io/nfs-subdir-external-provisioner

parameters:

archiveOnDelete: "true" ## 删除pv的时候,pv的内容是否要备份

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: nfs-client-provisioner

labels:

app: nfs-client-provisioner

# replace with namespace where provisioner is deployed

namespace: default

spec:

replicas: 1

strategy:

type: Recreate

selector:

matchLabels:

app: nfs-client-provisioner

template:

metadata:

labels:

app: nfs-client-provisioner

spec:

serviceAccountName: nfs-client-provisioner

containers:

- name: nfs-client-provisioner

image: registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/nfs-subdir-external-provisioner:v4.0.2

# resources:

# limits:

# cpu: 10m

# requests:

# cpu: 10m

volumeMounts:

- name: nfs-client-root

mountPath: /persistentvolumes

env:

- name: PROVISIONER_NAME

value: k8s-sigs.io/nfs-subdir-external-provisioner

- name: NFS_SERVER

value: 172.31.0.4 ## 指定自己nfs服务器地址

- name: NFS_PATH

value: /nfs/data ## nfs服务器共享的目录

volumes:

- name: nfs-client-root

nfs:

server: 172.31.0.4

path: /nfs/data

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: nfs-client-provisioner

# replace with namespace where provisioner is deployed

namespace: default

---

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: nfs-client-provisioner-runner

rules:

- apiGroups: [""]

resources: ["nodes"]

verbs: ["get", "list", "watch"]

- apiGroups: [""]

resources: ["persistentvolumes"]

verbs: ["get", "list", "watch", "create", "delete"]

- apiGroups: [""]

resources: ["persistentvolumeclaims"]

verbs: ["get", "list", "watch", "update"]

- apiGroups: ["storage.k8s.io"]

resources: ["storageclasses"]

verbs: ["get", "list", "watch"]

- apiGroups: [""]

resources: ["events"]

verbs: ["create", "update", "patch"]

---

kind: ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: run-nfs-client-provisioner

subjects:

- kind: ServiceAccount

name: nfs-client-provisioner

# replace with namespace where provisioner is deployed

namespace: default

roleRef:

kind: ClusterRole

name: nfs-client-provisioner-runner

apiGroup: rbac.authorization.k8s.io

---

kind: Role

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: leader-locking-nfs-client-provisioner

# replace with namespace where provisioner is deployed

namespace: default

rules:

- apiGroups: [""]

resources: ["endpoints"]

verbs: ["get", "list", "watch", "create", "update", "patch"]

---

kind: RoleBinding

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: leader-locking-nfs-client-provisioner

# replace with namespace where provisioner is deployed

namespace: default

subjects:

- kind: ServiceAccount

name: nfs-client-provisioner

# replace with namespace where provisioner is deployed

namespace: default

roleRef:

kind: Role

name: leader-locking-nfs-client-provisioner

apiGroup: rbac.authorization.k8s.io

6.3 集群指标监控组件

metrics-server.yaml:

apiVersion: v1

kind: ServiceAccount

metadata:

labels:

k8s-app: metrics-server

name: metrics-server

namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

labels:

k8s-app: metrics-server

rbac.authorization.k8s.io/aggregate-to-admin: "true"

rbac.authorization.k8s.io/aggregate-to-edit: "true"

rbac.authorization.k8s.io/aggregate-to-view: "true"

name: system:aggregated-metrics-reader

rules:

- apiGroups:

- metrics.k8s.io

resources:

- pods

- nodes

verbs:

- get

- list

- watch

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

labels:

k8s-app: metrics-server

name: system:metrics-server

rules:

- apiGroups:

- ""

resources:

- pods

- nodes

- nodes/stats

- namespaces

- configmaps

verbs:

- get

- list

- watch

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

labels:

k8s-app: metrics-server

name: metrics-server-auth-reader

namespace: kube-system

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: extension-apiserver-authentication-reader

subjects:

- kind: ServiceAccount

name: metrics-server

namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

labels:

k8s-app: metrics-server

name: metrics-server:system:auth-delegator

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: system:auth-delegator

subjects:

- kind: ServiceAccount

name: metrics-server

namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

labels:

k8s-app: metrics-server

name: system:metrics-server

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: system:metrics-server

subjects:

- kind: ServiceAccount

name: metrics-server

namespace: kube-system

---

apiVersion: v1

kind: Service

metadata:

labels:

k8s-app: metrics-server

name: metrics-server

namespace: kube-system

spec:

ports:

- name: https

port: 443

protocol: TCP

targetPort: https

selector:

k8s-app: metrics-server

---

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

k8s-app: metrics-server

name: metrics-server

namespace: kube-system

spec:

selector:

matchLabels:

k8s-app: metrics-server

strategy:

rollingUpdate:

maxUnavailable: 0

template:

metadata:

labels:

k8s-app: metrics-server

spec:

containers:

- args:

- --cert-dir=/tmp

- --kubelet-insecure-tls

- --secure-port=4443

- --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname

- --kubelet-use-node-status-port

image: registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/metrics-server:v0.4.3

imagePullPolicy: IfNotPresent

livenessProbe:

failureThreshold: 3

httpGet:

path: /livez

port: https

scheme: HTTPS

periodSeconds: 10

name: metrics-server

ports:

- containerPort: 4443

name: https

protocol: TCP

readinessProbe:

failureThreshold: 3

httpGet:

path: /readyz

port: https

scheme: HTTPS

periodSeconds: 10

securityContext:

readOnlyRootFilesystem: true

runAsNonRoot: true

runAsUser: 1000

volumeMounts:

- mountPath: /tmp

name: tmp-dir

nodeSelector:

kubernetes.io/os: linux

priorityClassName: system-cluster-critical

serviceAccountName: metrics-server

volumes:

- emptyDir: {}

name: tmp-dir

---

apiVersion: apiregistration.k8s.io/v1

kind: APIService

metadata:

labels:

k8s-app: metrics-server

name: v1beta1.metrics.k8s.io

spec:

group: metrics.k8s.io

groupPriorityMinimum: 100

insecureSkipTLSVerify: true

service:

name: metrics-server

namespace: kube-system

version: v1beta1

versionPriority: 100

kubectl top nodes

7.安装KubeSphere

7.1 下载核心配置文件

wget https://github.com/kubesphere/ks-installer/releases/download/v3.1.1/kubesphere-installer.yaml

wget https://github.com/kubesphere/ks-installer/releases/download/v3.1.1/cluster-configuration.yaml

7.2 执行配置文件

通过上面的wget下载的配置文件, 通过kubectl apply -f x.yaml执行:

kubectl apply -f kubesphere-installer.yaml

kubectl apply -f cluster-configuration.yaml

查看进度:

kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}') -f

解决etcd监控证书找不到问题

kubectl -n kubesphere-monitoring-system create secret generic kube-etcd-client-certs --from-file=etcd-client-ca.crt=/etc/kubernetes/pki/etcd/ca.crt --from-file=etcd-client.crt=/etc/kubernetes/pki/apiserver-etcd-client.crt --from-file=etcd-client.key=/etc/kubernetes/pki/apiserver-etcd-client.key

7.3 配置文件

7.3.1 kubesphere-installer.yaml

---

apiVersion: apiextensions.k8s.io/v1beta1

kind: CustomResourceDefinition

metadata:

name: clusterconfigurations.installer.kubesphere.io

spec:

group: installer.kubesphere.io

versions:

- name: v1alpha1

served: true

storage: true

scope: Namespaced

names:

plural: clusterconfigurations

singular: clusterconfiguration

kind: ClusterConfiguration

shortNames:

- cc

---

apiVersion: v1

kind: Namespace

metadata:

name: kubesphere-system

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: ks-installer

namespace: kubesphere-system

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

name: ks-installer

rules:

- apiGroups:

- ""

resources:

- '*'

verbs:

- '*'

- apiGroups:

- apps

resources:

- '*'

verbs:

- '*'

- apiGroups:

- extensions

resources:

- '*'

verbs:

- '*'

- apiGroups:

- batch

resources:

- '*'

verbs:

- '*'

- apiGroups:

- rbac.authorization.k8s.io

resources:

- '*'

verbs:

- '*'

- apiGroups:

- apiregistration.k8s.io

resources:

- '*'

verbs:

- '*'

- apiGroups:

- apiextensions.k8s.io

resources:

- '*'

verbs:

- '*'

- apiGroups:

- tenant.kubesphere.io

resources:

- '*'

verbs:

- '*'

- apiGroups:

- certificates.k8s.io

resources:

- '*'

verbs:

- '*'

- apiGroups:

- devops.kubesphere.io

resources:

- '*'

verbs:

- '*'

- apiGroups:

- monitoring.coreos.com

resources:

- '*'

verbs:

- '*'

- apiGroups:

- logging.kubesphere.io

resources:

- '*'

verbs:

- '*'

- apiGroups:

- jaegertracing.io

resources:

- '*'

verbs:

- '*'

- apiGroups:

- storage.k8s.io

resources:

- '*'

verbs:

- '*'

- apiGroups:

- admissionregistration.k8s.io

resources:

- '*'

verbs:

- '*'

- apiGroups:

- policy

resources:

- '*'

verbs:

- '*'

- apiGroups:

- autoscaling

resources:

- '*'

verbs:

- '*'

- apiGroups:

- networking.istio.io

resources:

- '*'

verbs:

- '*'

- apiGroups:

- config.istio.io

resources:

- '*'

verbs:

- '*'

- apiGroups:

- iam.kubesphere.io

resources:

- '*'

verbs:

- '*'

- apiGroups:

- notification.kubesphere.io

resources:

- '*'

verbs:

- '*'

- apiGroups:

- auditing.kubesphere.io

resources:

- '*'

verbs:

- '*'

- apiGroups:

- events.kubesphere.io

resources:

- '*'

verbs:

- '*'

- apiGroups:

- core.kubefed.io

resources:

- '*'

verbs:

- '*'

- apiGroups:

- installer.kubesphere.io

resources:

- '*'

verbs:

- '*'

- apiGroups:

- storage.kubesphere.io

resources:

- '*'

verbs:

- '*'

- apiGroups:

- security.istio.io

resources:

- '*'

verbs:

- '*'

- apiGroups:

- monitoring.kiali.io

resources:

- '*'

verbs:

- '*'

- apiGroups:

- kiali.io

resources:

- '*'

verbs:

- '*'

- apiGroups:

- networking.k8s.io

resources:

- '*'

verbs:

- '*'

- apiGroups:

- kubeedge.kubesphere.io

resources:

- '*'

verbs:

- '*'

- apiGroups:

- types.kubefed.io

resources:

- '*'

verbs:

- '*'

---

kind: ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: ks-installer

subjects:

- kind: ServiceAccount

name: ks-installer

namespace: kubesphere-system

roleRef:

kind: ClusterRole

name: ks-installer

apiGroup: rbac.authorization.k8s.io

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: ks-installer

namespace: kubesphere-system

labels:

app: ks-install

spec:

replicas: 1

selector:

matchLabels:

app: ks-install

template:

metadata:

labels:

app: ks-install

spec:

serviceAccountName: ks-installer

containers:

- name: installer

image: kubesphere/ks-installer:v3.1.1

imagePullPolicy: "Always"

resources:

limits:

cpu: "1"

memory: 1Gi

requests:

cpu: 20m

memory: 100Mi

volumeMounts:

- mountPath: /etc/localtime

name: host-time

volumes:

- hostPath:

path: /etc/localtime

type: ""

name: host-time

7.3.2 cluster-configuration.yaml

---

apiVersion: installer.kubesphere.io/v1alpha1

kind: ClusterConfiguration

metadata:

name: ks-installer

namespace: kubesphere-system

labels:

version: v3.1.1

spec:

persistence:

storageClass: "" # If there is no default StorageClass in your cluster, you need to specify an existing StorageClass here.

authentication:

jwtSecret: "" # Keep the jwtSecret consistent with the Host Cluster. Retrieve the jwtSecret by executing "kubectl -n kubesphere-system get cm kubesphere-config -o yaml | grep -v "apiVersion" | grep jwtSecret" on the Host Cluster.

local_registry: "" # Add your private registry address if it is needed.

etcd:

monitoring: true # Enable or disable etcd monitoring dashboard installation. You have to create a Secret for etcd before you enable it.

endpointIps: 172.31.0.4 # etcd cluster EndpointIps. It can be a bunch of IPs here.

port: 2379 # etcd port.

tlsEnable: true

common:

redis:

enabled: true

openldap:

enabled: true

minioVolumeSize: 20Gi # Minio PVC size.

openldapVolumeSize: 2Gi # openldap PVC size.

redisVolumSize: 2Gi # Redis PVC size.

monitoring:

# type: external # Whether to specify the external prometheus stack, and need to modify the endpoint at the next line.

endpoint: http://prometheus-operated.kubesphere-monitoring-system.svc:9090 # Prometheus endpoint to get metrics data.

es: # Storage backend for logging, events and auditing.

# elasticsearchMasterReplicas: 1 # The total number of master nodes. Even numbers are not allowed.

# elasticsearchDataReplicas: 1 # The total number of data nodes.

elasticsearchMasterVolumeSize: 4Gi # The volume size of Elasticsearch master nodes.

elasticsearchDataVolumeSize: 20Gi # The volume size of Elasticsearch data nodes.

logMaxAge: 7 # Log retention time in built-in Elasticsearch. It is 7 days by default.

elkPrefix: logstash # The string making up index names. The index name will be formatted as ks-<elk_prefix>-log.

basicAuth:

enabled: false

username: ""

password: ""

externalElasticsearchUrl: ""

externalElasticsearchPort: ""

console:

enableMultiLogin: true # Enable or disable simultaneous logins. It allows different users to log in with the same account at the same time.

port: 30880

alerting: # (CPU: 0.1 Core, Memory: 100 MiB) It enables users to customize alerting policies to send messages to receivers in time with different time intervals and alerting levels to choose from.

enabled: true # Enable or disable the KubeSphere Alerting System.

# thanosruler:

# replicas: 1

# resources: {}

auditing: # Provide a security-relevant chronological set of records,recording the sequence of activities happening on the platform, initiated by different tenants.

enabled: true # Enable or disable the KubeSphere Auditing Log System.

devops: # (CPU: 0.47 Core, Memory: 8.6 G) Provide an out-of-the-box CI/CD system based on Jenkins, and automated workflow tools including Source-to-Image & Binary-to-Image.

enabled: true # Enable or disable the KubeSphere DevOps System.

jenkinsMemoryLim: 2Gi # Jenkins memory limit.

jenkinsMemoryReq: 1500Mi # Jenkins memory request.

jenkinsVolumeSize: 8Gi # Jenkins volume size.

jenkinsJavaOpts_Xms: 512m # The following three fields are JVM parameters.

jenkinsJavaOpts_Xmx: 512m

jenkinsJavaOpts_MaxRAM: 2g

events: # Provide a graphical web console for Kubernetes Events exporting, filtering and alerting in multi-tenant Kubernetes clusters.

enabled: true # Enable or disable the KubeSphere Events System.

ruler:

enabled: true

replicas: 2

logging: # (CPU: 57 m, Memory: 2.76 G) Flexible logging functions are provided for log query, collection and management in a unified console. Additional log collectors can be added, such as Elasticsearch, Kafka and Fluentd.

enabled: true # Enable or disable the KubeSphere Logging System.

logsidecar:

enabled: true

replicas: 2

metrics_server: # (CPU: 56 m, Memory: 44.35 MiB) It enables HPA (Horizontal Pod Autoscaler).

enabled: false # Enable or disable metrics-server.

monitoring:

storageClass: "" # If there is an independent StorageClass you need for Prometheus, you can specify it here. The default StorageClass is used by default.

# prometheusReplicas: 1 # Prometheus replicas are responsible for monitoring different segments of data source and providing high availability.

prometheusMemoryRequest: 400Mi # Prometheus request memory.

prometheusVolumeSize: 20Gi # Prometheus PVC size.

# alertmanagerReplicas: 1 # AlertManager Replicas.

multicluster:

clusterRole: none # host | member | none # You can install a solo cluster, or specify it as the Host or Member Cluster.

network:

networkpolicy: # Network policies allow network isolation within the same cluster, which means firewalls can be set up between certain instances (Pods).

# Make sure that the CNI network plugin used by the cluster supports NetworkPolicy. There are a number of CNI network plugins that support NetworkPolicy, including Calico, Cilium, Kube-router, Romana and Weave Net.

enabled: true # Enable or disable network policies.

ippool: # Use Pod IP Pools to manage the Pod network address space. Pods to be created can be assigned IP addresses from a Pod IP Pool.

type: calico # Specify "calico" for this field if Calico is used as your CNI plugin. "none" means that Pod IP Pools are disabled.

topology: # Use Service Topology to view Service-to-Service communication based on Weave Scope.

type: none # Specify "weave-scope" for this field to enable Service Topology. "none" means that Service Topology is disabled.

openpitrix: # An App Store that is accessible to all platform tenants. You can use it to manage apps across their entire lifecycle.

store:

enabled: true # Enable or disable the KubeSphere App Store.

servicemesh: # (0.3 Core, 300 MiB) Provide fine-grained traffic management, observability and tracing, and visualized traffic topology.

enabled: true # Base component (pilot). Enable or disable KubeSphere Service Mesh (Istio-based).

kubeedge: # Add edge nodes to your cluster and deploy workloads on edge nodes.

enabled: true # Enable or disable KubeEdge.

cloudCore:

nodeSelector: {"node-role.kubernetes.io/worker": ""}

tolerations: []

cloudhubPort: "10000"

cloudhubQuicPort: "10001"

cloudhubHttpsPort: "10002"

cloudstreamPort: "10003"

tunnelPort: "10004"

cloudHub:

advertiseAddress: # At least a public IP address or an IP address which can be accessed by edge nodes must be provided.

- "" # Note that once KubeEdge is enabled, CloudCore will malfunction if the address is not provided.

nodeLimit: "100"

service:

cloudhubNodePort: "30000"

cloudhubQuicNodePort: "30001"

cloudhubHttpsNodePort: "30002"

cloudstreamNodePort: "30003"

tunnelNodePort: "30004"

edgeWatcher:

nodeSelector: {"node-role.kubernetes.io/worker": ""}

tolerations: []

edgeWatcherAgent:

nodeSelector: {"node-role.kubernetes.io/worker": ""}

tolerations: []

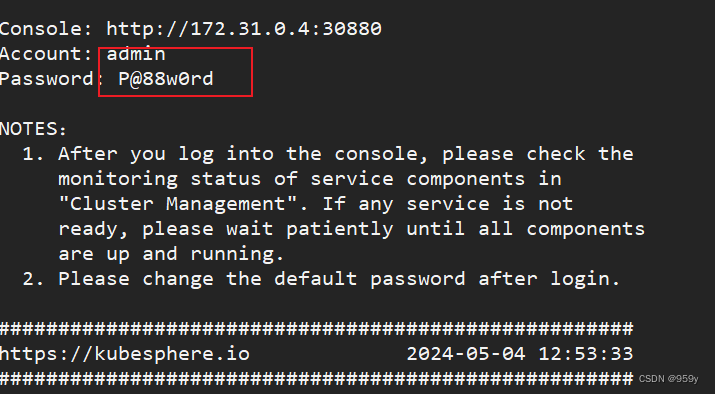

打印的日志最后的成功的说明:

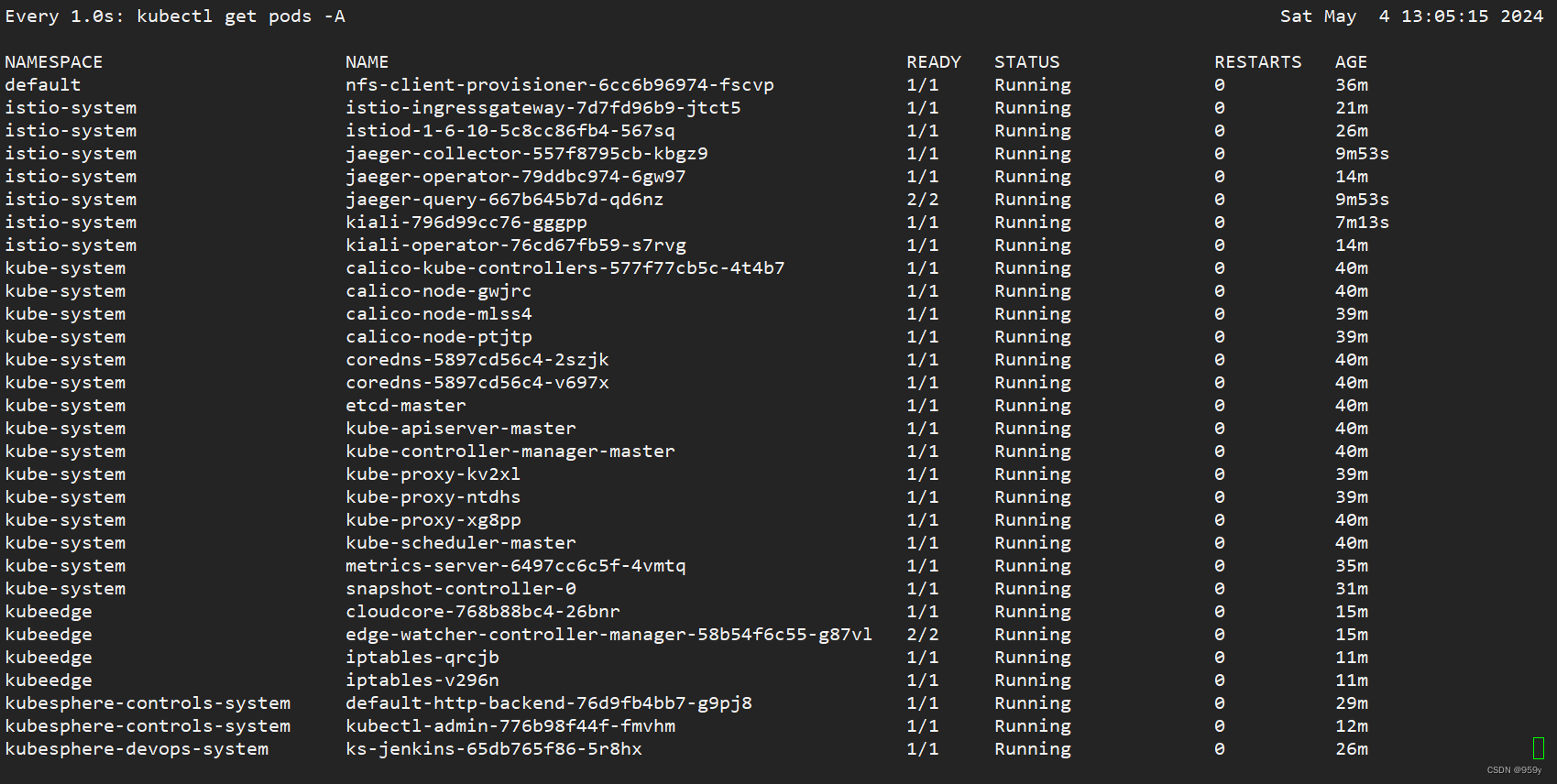

全部的pod都成功启动:

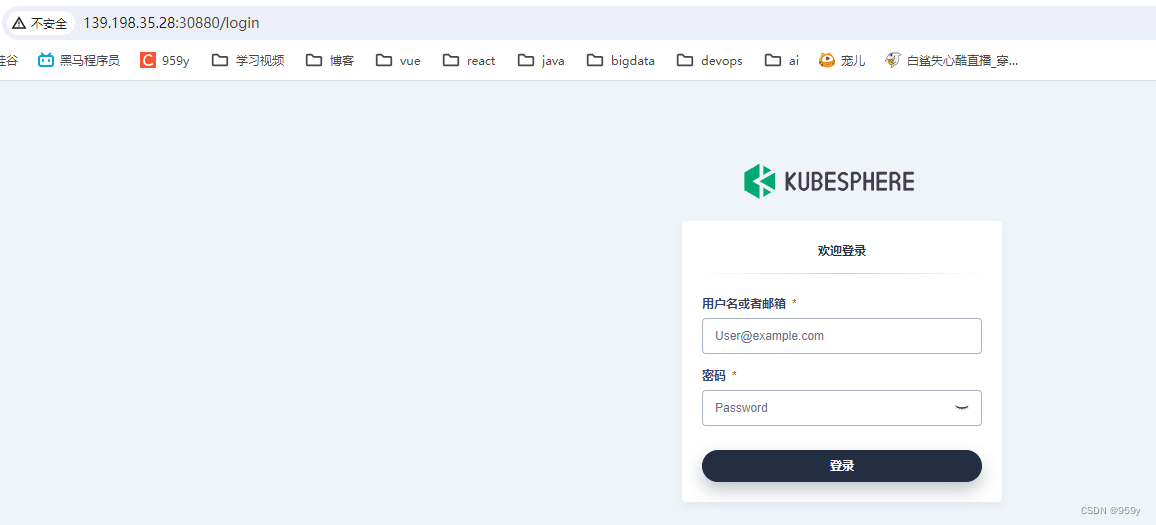

访问页面:

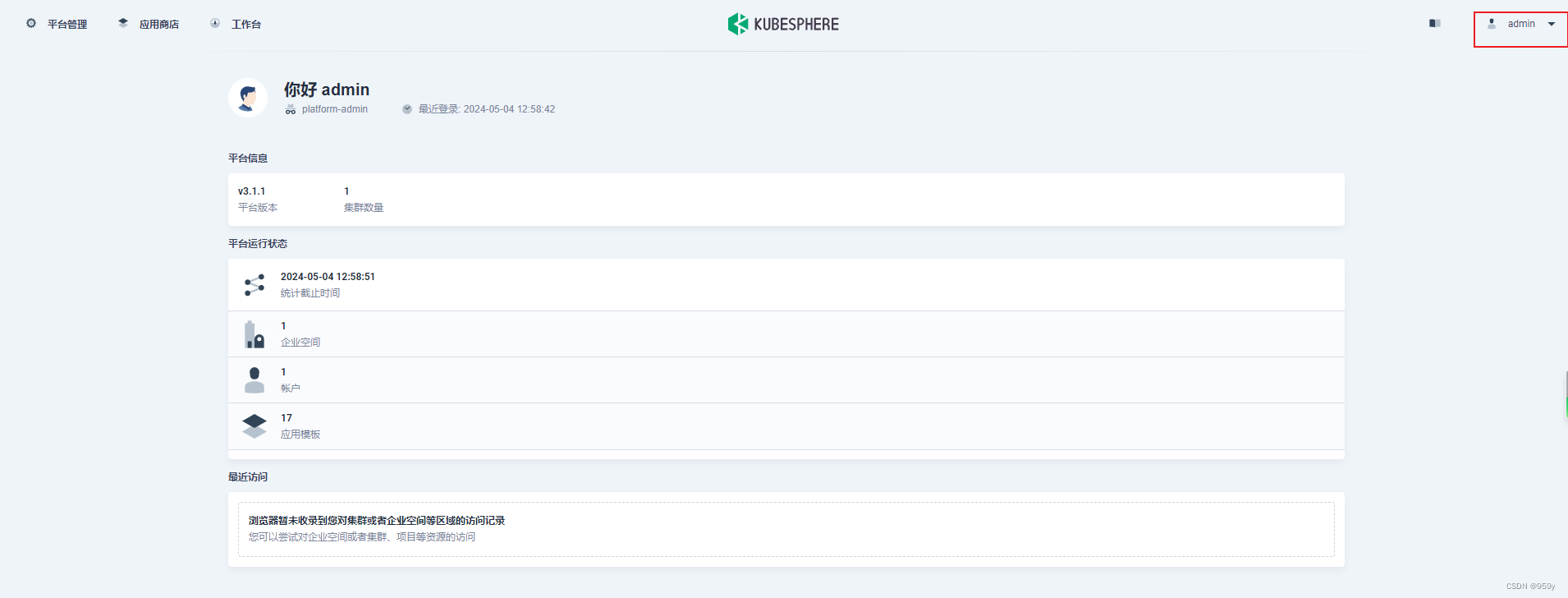

输入密码:

![[C++核心编程-02]----C++引用详解和使用方法分析](https://img-blog.csdnimg.cn/direct/962c8c50d2f545d296449d3351d609ff.png)